Introduction

Implement Least Recently Used cache is one of the most common coding interview questions. In the real world, LRU policy can be used as one of the page replacement algorithms in virtual memory management. In this blog post, I would like to share my LRU cache implementation in Go. As the name implies, since we have limited the size of the cache, LRU is a cache replacement policy that we remove the least recently used element when we try to push a new element into the cache which has zero capacity. We will design,

Constructor(n): Initialize an LRUCache with a capacity of n.Get(key): Get the value (will always be positive) of the key if the key exists in the cache, otherwise return -1. Each element has a distinct key. We will save all elements we have into a hash map for $\mathcal{O}(1)$ constant time element lookup.Put(key, value): Set or insert the value if the key is not already present. When the cache reached its capacity, it should invalidate the least recently used item before inserting a new element. We will save all key-value pairs in a doubly linked list for $\mathcal{O}(1)$ element deletion and insertion.

A glance of doubly linked list in Go

Of course, you can implement your own doubly linked list. However, I think the idiomatic way is to use the container/list library in Go. Here is the definition of list.List.

type List struct {

root Element // sentinel list element, only &root, root.prev, and root.next are used

len int // current list length excluding (this) sentinel element

}

The node in the doubly linked list, list.Element is defined as,

type Element struct {

// Next and previous pointers in the doubly-linked list of elements.

// To simplify the implementation, internally a list l is implemented

// as a ring, such that &l.root is both the next element of the last

// list element (l.Back()) and the previous element of the first list

// element (l.Front()).

next, prev *Element

// The list to which this element belongs.

list *List

// The value stored with this element.

Value interface{}

}

Data structure design

Since list.List.Element.Value can be any type, a Pair struct I created will be used as list.List.Element.Value.

// Pair is the value of a list.List.Element.

type Pair struct {

key int

value int

}

LRUCache struct is as follows.

type LRUCache struct {

cap int // capacity

l *list.List // doubly linked list

m map[int]*list.Element // hash table for list.List.Element existence check

}

Let’s go through our approach by looking at some concrete examples.

At first, we will initialize a new LRUCache instance by calling obj := Constructor(2). So we create a hash map with the length of 2 as well as an empty list.List.

Then we call obj.Put(1, 10). Since the capacity of our list.List is larger than zero; we don’t have to remove the least recently used element. At the same time, we didn’t find any element with a key 1 in our hash map so set the value of key 1 as a pointer points to the list.Element we just created.

Next, we call obj.Put(2, 20). Still, the capacity of our list.List is larger than zero. We create a new List.Element and push it in front of the first List.Element we created before and set the value of key 2 as a pointer points to the new list.Element.

Let’s see if we have key 1 by calling obj.Get(1). We do find key 1 in our hash map. Since we just visit List.Element with key 1, we have to move it to the front of our linked list.

What may happen when we call obj.Put(3, 30)? list.List is full now so we have to remove the least recently used list.Element. In this case, the least recently used element is the list.Element with key 2. We clear the entry has key 2 in our hash map and delete that list.Element from list.List. Finally, we can insert a new list.Element with Value.Pair{key: 3, value: 30} in front of the first list.Element.

Let’s check if we have list.Element with key 2 by calling obj.Get(2). Nothing should happen if we could not find key 2 in our hash map.

This time we call obj.Put(4, 40). List.Element to be removed is the one with key 1. Insert list.Element with Value.Pair{key: 4, value: 40} to the front of list.List and update the hash map.

Nothing happened when we call obj.Get(1). We removed the List.Element with key 1 in the last step.

Let’s check if the List.Element with key 3 is still in the list.List by calling obj.Get(3). We find this List.Element in our hash map so we move it to the front of list.List.

Now everything looks clear. Let’s jump into the coding part.

The code

package main

import (

"container/list"

"fmt"

)

type LRUCache struct {

cap int // capacity

l *list.List // doubly linked list

m map[int]*list.Element // hash table for checking if list node exists

}

// Pair is the value of a list node.

type Pair struct {

key int

value int

}

// Constructor initializes a new LRUCache.

func Constructor(capacity int) LRUCache {

return LRUCache{

cap: capacity,

l: new(list.List),

m: make(map[int]*list.Element, capacity),

}

}

// Get a list node from the hash map.

func (c *LRUCache) Get(key int) int {

// check if list node exists

if node, ok := c.m[key]; ok {

val := node.Value.(*list.Element).Value.(Pair).value

// move node to front

c.l.MoveToFront(node)

return val

}

return -1

}

// Put key and value in the LRUCache

func (c *LRUCache) Put(key int, value int) {

// check if list node exists

if node, ok := c.m[key]; ok {

// move the node to front

c.l.MoveToFront(node)

// update the value of a list node

node.Value.(*list.Element).Value = Pair{key: key, value: value}

} else {

// delete the last list node if the list is full

if c.l.Len() == c.cap {

// get the key that we want to delete

idx := c.l.Back().Value.(*list.Element).Value.(Pair).key

// delete the node pointer in the hash map by key

delete(c.m, idx)

// remove the last list node

c.l.Remove(c.l.Back())

}

// initialize a list node

node := &list.Element{

Value: Pair{

key: key,

value: value,

},

}

// push the new list node into the list

ptr := c.l.PushFront(node)

// save the node pointer in the hash map

c.m[key] = ptr

}

}

func main() {

obj := Constructor(2) // nil

obj.Put(1, 10) // nil, linked list: [1:10]

obj.Put(2, 20) // nil, linked list: [2:20, 1:10]

fmt.Println(obj.Get(1)) // 10, linked list: [1:10, 2:20]

obj.Put(3, 30) // nil, linked list: [3:30, 1:10]

fmt.Println(obj.Get(2)) // -1, linked list: [3:30, 1:10]

obj.Put(4, 40) // nil, linked list: [4:40, 3:30]

fmt.Println(obj.Get(1)) // -1, linked list: [4:40, 3:30]

fmt.Println(obj.Get(3)) // 30, linked list: [3:30, 4:40]

}

Summary

In this blog post, we implement an LRU cache in Go. As I said at the beginning, LRU policy is widely used in operating system design. Although, LRU policy in page replacement algorithm is usually considered too expensive because of the frequency of memory references. In a modern operating system, find the absolute least recently used page among millions of pages is not realistic. As a result, LRU approximation, for example, clock replacement algorithm is introduced to avoid the overhead of LRU. You can read more about the Linux memory management here.

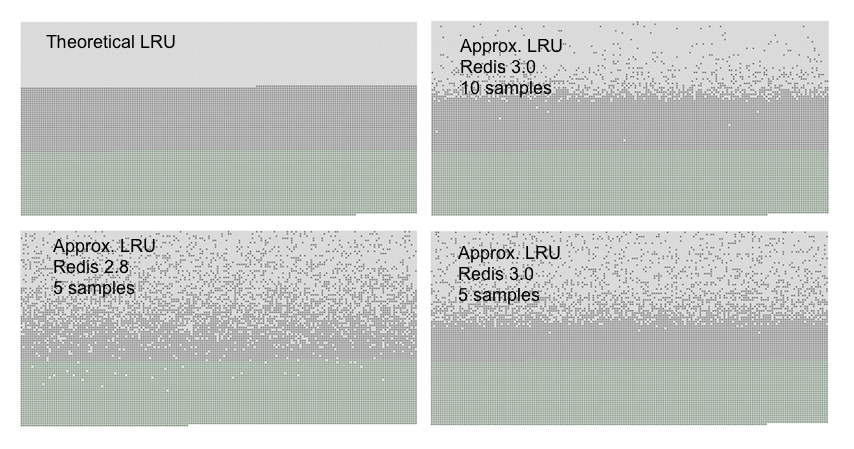

A similar workaround can be found in Redis memory eviction policy implementation. You can actually control the precision of Redis LRU algorithm by changing the maxmemory-samples parameter. The following is a graphical comparison of how the LRU approximation used by Redis compares with true LRU.

- The light gray band are objects that were evicted.

- The gray band are objects that were not evicted.

- The green band are objects that were added.

It turns out that the LRU approximated algorithm works very well in Redis 3.0 when the samples size is 5. You can read more about using Redis as an LRU cache here.

References

- My LeetCode solutions written in Go on GitHub.

- LRU Cache on LeetCode

- Package list

- Implement An LRU Cache - The LRU Cache Eviction Policy

- Using Redis as an LRU cache